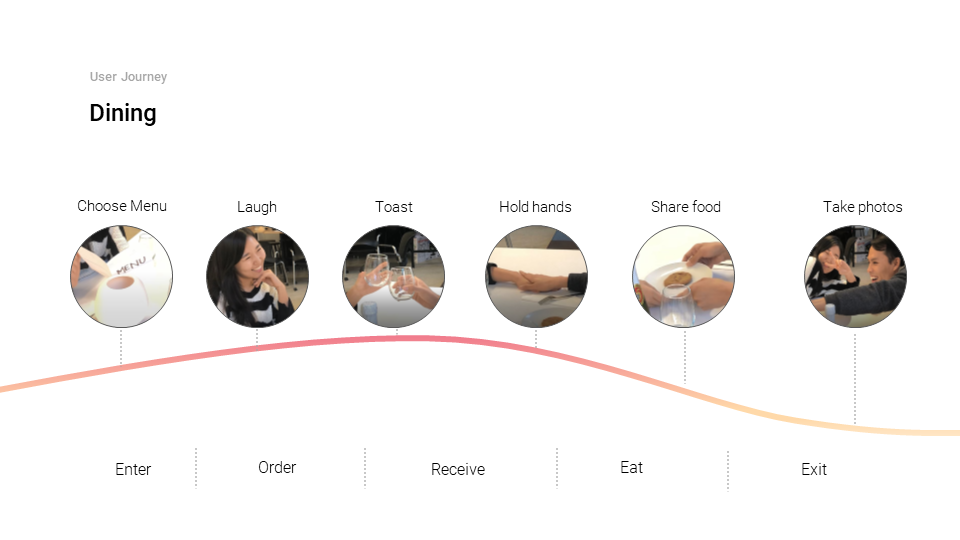

After both parties "arrive" through virtual chat technology, they place their hands on the transparent menus atop the table. The menus then scan each person's historical dining data and recommend themed tastings based on the couple's preferences.

A timeline visualizing the five basic tastes (sweet, salty, sour, bitter, umami) begins flowing across the table, in step with each dish served.

A video camera captures and recognizes each participant’s emotions. The Data Date system then analyzes how closely the couple align on six key emotions (happiness, sadness, anger, disgust, surprise, and fear) and visualizes the result.
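One way to picture the alignment step is as a comparison of two emotion profiles. The sketch below is purely illustrative and is not the Data Date implementation: it assumes each participant's recognized emotions arrive as scores between 0 and 1, and it uses cosine similarity as a stand-in alignment measure. The names `EMOTIONS`, `alignment`, and `per_emotion_gap` are hypothetical.

```python
import math

# The six emotions named in the concept; the score format is an assumption.
EMOTIONS = ("happiness", "sadness", "anger", "disgust", "surprise", "fear")


def alignment(a, b):
    """Cosine similarity of two emotion-score dicts (0.0 if either is all zeros)."""
    va = [a.get(e, 0.0) for e in EMOTIONS]
    vb = [b.get(e, 0.0) for e in EMOTIONS]
    dot = sum(x * y for x, y in zip(va, vb))
    norm_a = math.sqrt(sum(x * x for x in va))
    norm_b = math.sqrt(sum(y * y for y in vb))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def per_emotion_gap(a, b):
    """Absolute difference per emotion, e.g. to drive one bar per emotion."""
    return {e: abs(a.get(e, 0.0) - b.get(e, 0.0)) for e in EMOTIONS}


# Made-up scores for two diners at one moment in the meal.
person_a = {"happiness": 0.8, "surprise": 0.2}
person_b = {"happiness": 0.6, "surprise": 0.1, "fear": 0.3}

overall = alignment(person_a, person_b)
gaps = per_emotion_gap(person_a, person_b)
```

Here `overall` gives a single score that a visualization could show as closeness, while `gaps` shows which emotions the two diners share and which they do not.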

The artifact at the center of the table communicates each person’s pulse through soft vibration, as if the couple were physically holding hands and feeling each other's heartbeats.

At the end of the meal, each person receives a souvenir that, when scanned with smart glasses, replays joyful moments from the date as augmented-reality (AR) videos.